Using Digital Twins for Streaming Analytics

Digital twins can address a key deficiency in traditional stream-processing and complex event processing systems. These systems typically focus on extracting interesting patterns from incoming data streams using applications that keep no state for individual data sources. While these applications may maintain state information about the data stream itself, they can’t easily track dynamic information about each individual data source. For example, if an IoT application is attempting to detect whether data from a temperature sensor predicts the failure of a medical freezer, it looks at patterns in the temperature changes, such as sudden spikes or a continued upward trend, without regard to the freezer’s usage or service history. However, adding dynamic information about a freezer’s recent abnormal events, door open/close actions, power fluctuations, and maintenance history would provide a much richer context for interpreting telemetry and would enable more informed predictions about possible impending failures. That’s what the real-time digital twin model can do.

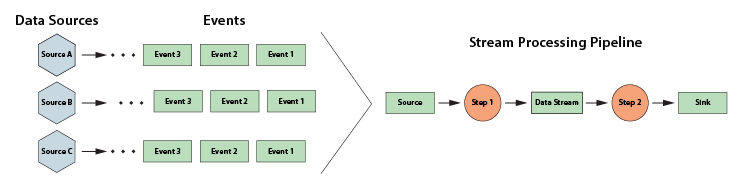
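To make the freezer example concrete, here is a minimal Python sketch contrasting a check that sees only the telemetry stream with one that also consults per-freezer context. Everything in it is an assumption made for illustration: the threshold, the `FreezerContext` fields, and the alert levels are hypothetical and are not part of any ScaleOut API.

```python
from dataclasses import dataclass

# Hypothetical threshold: a rise of this many degrees C between two
# consecutive readings is treated as a spike.
SPIKE_THRESHOLD_C = 3.0


def stream_only_alert(previous_temp_c: float, current_temp_c: float) -> bool:
    """Flag a spike using only the telemetry, with no per-freezer context."""
    return current_temp_c - previous_temp_c >= SPIKE_THRESHOLD_C


@dataclass
class FreezerContext:
    """Illustrative dynamic state tracked for one freezer."""
    door_open: bool = False
    days_since_service: int = 0
    recent_power_fluctuations: int = 0


def context_aware_alert(previous_temp_c: float, current_temp_c: float,
                        ctx: FreezerContext) -> str:
    """Interpret the same spike in light of what is known about this freezer."""
    if current_temp_c - previous_temp_c < SPIKE_THRESHOLD_C:
        return "ok"
    if ctx.door_open:
        # A brief warm-up is expected while the door is open.
        return "ok"
    if ctx.recent_power_fluctuations > 0 or ctx.days_since_service > 365:
        # The spike coincides with known risk factors.
        return "urgent"
    return "watch"
```

The stream-only check reports every spike the same way, while the context-aware version can suppress an expected spike (door open) and escalate a suspicious one (recent power fluctuations or overdue service).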

The following diagram depicts a typical stream processing pipeline processing events from many data sources in traditional software architectures:

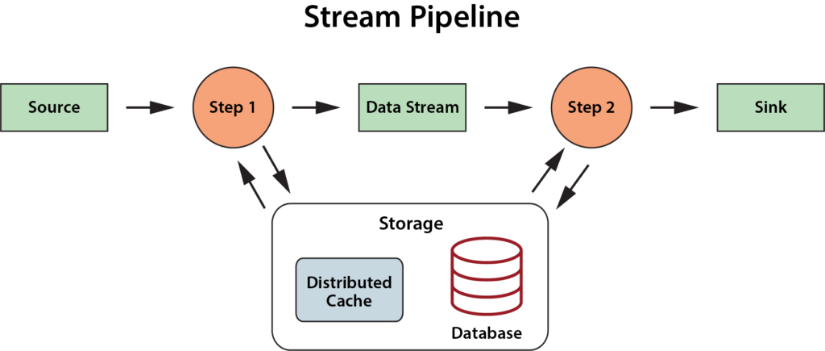

More recent stream-processing platforms, such as Apache Flink, have incorporated stateful stream processing into their architectures in the form of external key-value stores or databases that the application can make use of to enhance its analysis, as shown below. But they do not offer a specific semantic model and integrated, fast data store which applications can use to organize and track dynamic state information for each data source and thereby deepen their ability to analyze data streams.

The real-time digital twin model offers an answer to this challenge. The term “digital twin” was coined by Dr. Michael Grieves (U. Michigan) in 2002 for use in product life cycle management (PLM), and it was recently popularized for IoT by Gartner in a 2017 report. In PLM, digital twins are typically used to model the behavior of individual devices or components within a system to assist in their design and development. ScaleOut Digital Twins™ extends and adapts this concept for use in stream processing applications to enhance the analysis of incoming telemetry. In this usage, instead of emulating the behavior of devices, real-time digital twins host dynamic state information about data sources which generate event streams (including devices or other sources of telemetry) and use this state information to deepen real-time analysis and enable better feedback and alerting.

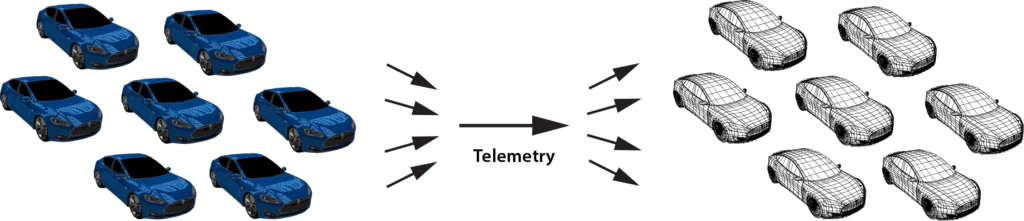

For example, using a real-time digital twin for each car in its fleet, a rental car company can separately track and analyze telemetry from each vehicle and maintain dynamic state information, such as the driver’s contract restrictions, evolving data about vehicle speed and location history, and known maintenance issues for the vehicle.

Real-time digital twins provide a powerful and intuitive approach to organizing this dynamic state data, and, by shifting the focus of analysis from the event stream to the behavior of data sources, they potentially enable much deeper introspection than was previously possible. With real-time digital twins, an application can conveniently track all relevant information about the evolving state of each physical data source. It can then analyze incoming events in this rich context to provide high-quality insights, alerting, and feedback.

Real-time digital twins are well suited for applications with thousands or even millions of data sources, such as managing vehicle fleets, health-tracking with smart devices, security, real-time asset-tracking, and much more. Unlike traditional stream processing and streaming analytics, which cannot easily maintain and analyze dynamic information about individual data sources, real-time digital twins can separately analyze incoming telemetry from each data source, update state information, and respond in milliseconds. The underlying in-memory computing platform takes on the responsibility of delivering incoming messages to the real-time digital twin instances, and it transparently scales overall throughput to handle thousands of data streams.

Beyond providing a powerful semantic model for stateful stream processing, real-time digital twins also offer advantages for software engineering, such as simplifying design and reducing development time, because they can take advantage of well-understood object-oriented programming techniques. A real-time digital twin consists of two components: a class definition that defines the state data for each data source (which might include time-ordered event collections) and a method for analyzing incoming event messages and updating state data. Analytics methods can range from simple sequential code to machine-learning algorithms or rules engines. These methods also can reach out to databases to access and update historical data sets if necessary.
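The two components described above can be sketched in plain Python, using the rental car scenario. This is an illustration under stated assumptions, not the ScaleOut API: the class `CarTwinState`, the function `process_message`, the field names, and the "three speeding events" rule are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple


@dataclass
class CarTwinState:
    """Component 1: the state data kept for one data source (one car)."""
    car_id: str
    contract_speed_limit_kph: float
    known_issues: List[str] = field(default_factory=list)
    # Time-ordered event collection of (timestamp, speed); bounded so the
    # state does not grow without limit.
    speed_history: Deque[Tuple[float, float]] = field(
        default_factory=lambda: deque(maxlen=100))
    speeding_events: int = 0


def process_message(state: CarTwinState, timestamp: float,
                    speed_kph: float) -> List[str]:
    """Component 2: analyze one incoming message and update the state.

    Returns any alerts produced by this message.
    """
    state.speed_history.append((timestamp, speed_kph))
    alerts: List[str] = []
    if speed_kph > state.contract_speed_limit_kph:
        state.speeding_events += 1
        # Hypothetical rule: alert only once speeding is a repeated pattern.
        if state.speeding_events >= 3:
            alerts.append(
                f"{state.car_id}: repeated speeding "
                f"({state.speeding_events} events)")
    return alerts
```

Because the counter and history live in the per-car state, the decision to alert depends on that car's accumulated behavior and not only on the current message.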

These two components of a real-time digital twin, the data class and the message-processing method, are called a real-time digital twin model. After the application developer implements a model, it is deployed to the cloud service using the service’s UI. (It also can be deployed to ScaleOut StreamServer running on-premises using APIs.) Once deployed, the cloud service uses this model to automatically create unique instances of real-time digital twins for all data sources in order to process incoming event messages.

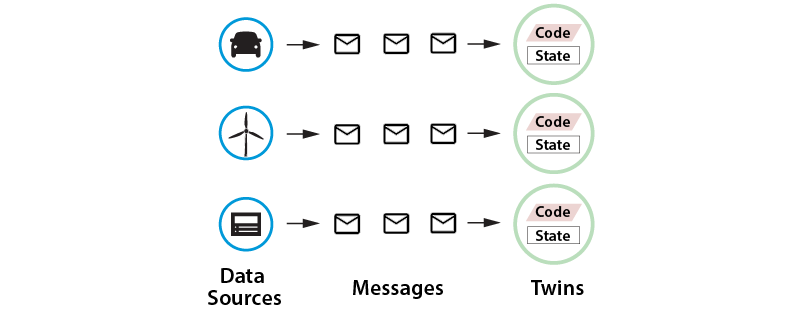

The following diagram shows three physical data sources sending messages to their corresponding real-time digital twin instances created by ScaleOut Digital Twins:

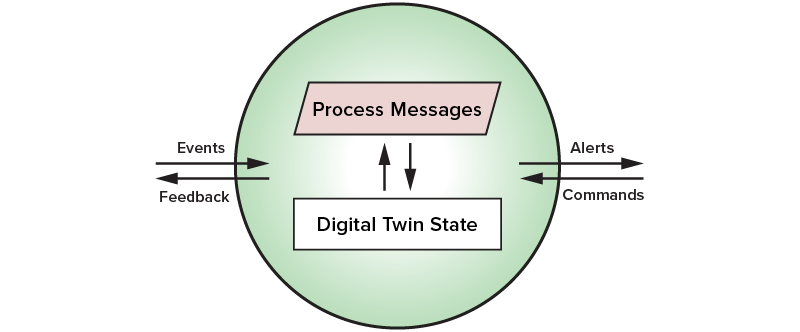

As illustrated below, a real-time digital twin instance can receive event messages from either its corresponding data source or from other digital twin instances. It also can receive command messages from other digital twin instances or applications. (The distinction between event and command messages is made by the application’s message-processing code.) In turn, it can generate alert messages to other digital twin instances or applications, and it can directly send feedback messages (including commands) to its corresponding data source.
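These message flows can be pictured with a small Python sketch in which the application's own code tells events from commands and collects outgoing alerts and feedback. The `kind` field, the message keys, the `Outbox` class, and the `increase_cooling` feedback command are hypothetical names chosen for this example only.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Outbox:
    """Messages produced while handling one incoming message."""
    alerts: List[str] = field(default_factory=list)    # to other twins or applications
    feedback: List[str] = field(default_factory=list)  # back to the data source


def handle_message(state: Dict[str, float], message: Dict[str, Any]) -> Outbox:
    """Application code decides whether a message is an event or a command."""
    out = Outbox()
    kind = message.get("kind")
    if kind == "command":
        # For example, another twin or an application adjusts the threshold.
        state["max_temp_c"] = float(message["max_temp_c"])
    elif kind == "event":
        temp_c = float(message["temp_c"])
        state["last_temp_c"] = temp_c
        if temp_c > state["max_temp_c"]:
            out.alerts.append(f"temperature {temp_c} exceeds {state['max_temp_c']}")
            out.feedback.append("increase_cooling")
    else:
        raise ValueError(f"unknown message kind: {kind!r}")
    return out
```

In this sketch a command changes how later events are interpreted, which is one reason to route both kinds of message to the same instance.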

The ScaleOut Digital Twins service automatically creates an instance of a real-time digital twin to receive and process incoming messages from the corresponding data source. Each data source is assumed to have a unique (string) identifier which is supplied within outgoing messages or by registering the data source with an event hub. To simplify application design, ScaleOut Digital Twins automatically correlates messages from a given data source for delivery to the corresponding instance; the message-handling code only receives messages corresponding to the associated data source. In large applications, ScaleOut Digital Twins may host thousands (or more) real-time digital twin instances to handle the workload from many data sources.
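The correlation step described above can be approximated with a short Python sketch: messages carry a string identifier, an instance is created the first time an identifier is seen, and the processing function only ever receives messages for its own source. The `TwinHost` class and the `source_id` key are assumptions for illustration; the actual service is a distributed, in-memory platform, which this single-process dictionary does not attempt to model.

```python
from typing import Any, Callable, Dict, Generic, TypeVar

S = TypeVar("S")


class TwinHost(Generic[S]):
    """Toy, single-process stand-in for per-source message correlation."""

    def __init__(self,
                 create_state: Callable[[str], S],
                 process: Callable[[S, Dict[str, Any]], None]) -> None:
        self._create_state = create_state
        self._process = process
        self.instances: Dict[str, S] = {}

    def deliver(self, message: Dict[str, Any]) -> None:
        source_id = message["source_id"]  # hypothetical message field
        if source_id not in self.instances:
            # First message from this source: create its instance on demand.
            self.instances[source_id] = self._create_state(source_id)
        # The handler sees only the state belonging to this source.
        self._process(self.instances[source_id], message)


def count_message(state: Dict[str, Any], message: Dict[str, Any]) -> None:
    """Example handler: count the messages received from one source."""
    state["count"] += 1


host = TwinHost(lambda source_id: {"id": source_id, "count": 0}, count_message)
for msg in ({"source_id": "freezer-1"}, {"source_id": "freezer-2"},
            {"source_id": "freezer-1"}):
    host.deliver(msg)
```

After these three deliveries the host holds two instances, one per distinct identifier, and each has counted only its own messages.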

The granularity of a real-time digital twin model is application-defined. It can process messages from a single sensor or those from a subsystem comprising multiple sensors. The application developer makes choices about which data (and event streams) are logically related and need to be encapsulated in a single real-time digital twin model to meet the application’s goals.
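One way to think about this choice is as the key used to group messages. The Python sketch below assumes, purely for illustration, that sensor identifiers have the form `subsystem/sensor` (for example `freezer-7/door`); the functions and the identifier format are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Message = Dict[str, str]


def per_sensor_key(message: Message) -> str:
    """Fine granularity: one twin per individual sensor."""
    return message["sensor_id"]


def per_subsystem_key(message: Message) -> str:
    """Coarse granularity: one twin per subsystem (the part before '/')."""
    return message["sensor_id"].split("/", 1)[0]


def group_by_twin(messages: List[Message],
                  key: Callable[[Message], str]) -> Dict[str, List[Message]]:
    """Group messages by the twin that would receive them under a given key."""
    groups: Dict[str, List[Message]] = defaultdict(list)
    for message in messages:
        groups[key(message)].append(message)
    return dict(groups)
```

With the subsystem key, temperature and door messages from the same freezer reach the same twin, so its state can relate them; with the sensor key, each stream is analyzed in isolation.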

In summary, real-time digital twins provide a powerful organizational tool that enables stream-processing applications to easily track and analyze the dynamic state of each individual data source instead of just analyzing the data contained within event streams. This additional context increases the developer’s ability to implement deep introspection within streaming analytics and represents a new way of thinking about stateful stream processing and real-time streaming analytics.